Overview

AI agent tool output displays should be intentional about what they show the end user. Displayed output should always be relevant, so users stay informed about how the system is reasoning, what it is doing, and what it is finding.

Introducing tool output

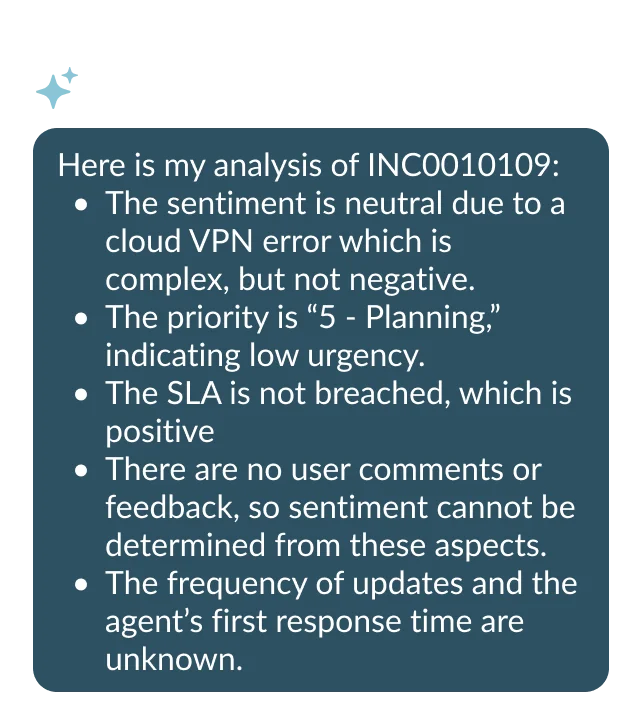

Before surfacing a recommendation, it can help to show some of the reasoning behind it so the user can make an informed decision. Always introduce this content conversationally, so the user understands its purpose and can quickly scan the important details.

Do: Provide an introductory statement that sets context, and format the output so it is easy to read.

Don’t: Provide reasoning without introductory language, or display reasoning as one large paragraph.

Surfacing relevant output

Finding the right balance between providing just enough reasoning and avoiding irrelevant explanations is crucial. Too little context can make decisions seem arbitrary, while excessive or unnecessary reasoning can overwhelm users and erode trust. A well-designed output offers just enough insight to aid understanding without distracting from the decision at hand.

Do: Provide a limited amount of useful information that helps the user make a decision.

Don’t: Provide a long list of reasoning that includes context that doesn’t help the user make a decision.

Formatting the output

Poorly formatted AI agent output is less helpful. Responses should cut unnecessary noise, set context, and be easy to scan.

Do: Set context with an opening statement, separate actions into bullet points for scannability, and, when necessary, end with a question that prompts the user to respond. Note: A separate message for “supervised” questions is coming in a later release.

Don’t: Leave outputs unformatted. Poor grammar and missing structure hinder usability and trust.
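
As a rough sketch of the structure described above (opening statement, one bullet per action, optional closing question), assuming a Python layer that renders plain text or Markdown for the chat surface. The names `ToolOutput`, `format_tool_output`, and the `max_items` cap are hypothetical and illustrative only; the cap is an added assumption reflecting the guidance to keep reasoning limited.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class ToolOutput:
    """Hypothetical container for a tool result shown to the end user."""
    intro: str                                        # opening statement that sets context
    actions: List[str] = field(default_factory=list)  # one bullet per action or finding
    question: Optional[str] = None                    # optional prompt for the user to respond to


def format_tool_output(output: ToolOutput, max_items: int = 5) -> str:
    """Render intro, bullets, and an optional question as scannable text.

    max_items is an assumed cap (not part of the guideline) that keeps
    the list short so only the most useful points reach the user.
    """
    lines = [output.intro.strip()]
    # Drop empty entries, then keep at most max_items bullets.
    bullets = [a.strip() for a in output.actions if a.strip()][:max_items]
    if bullets:
        lines.append("")  # blank line separates the intro from the list
        lines.extend(f"- {item}" for item in bullets)
    if output.question:
        lines.append("")  # blank line before the call to respond
        lines.append(output.question.strip())
    return "\n".join(lines)


if __name__ == "__main__":
    # Placeholder strings for illustration only.
    example = ToolOutput(
        intro="Here is what I checked before making a recommendation:",
        actions=["First finding", "Second finding"],
        question="Would you like me to proceed?",
    )
    print(format_tool_output(example))
```

Keeping the intro, list, and question as separate fields makes it hard to emit an unformatted wall of text by accident.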

Conversation and context

Whenever possible, tool outputs should include an introduction as well as relevant sources or citations. This supports our AI principles of trust and transparency.

Do: Lead responses with an introduction, and cite sources using both visual indicators and links.

Don’t: Provide responses without introductions or citations. Doing so removes the conversational aspect and makes the LLM’s sources less transparent.
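
A minimal sketch of leading with an introduction and citing sources with both a visual indicator and a link, assuming the chat surface renders Markdown links. The `Source` record and `format_with_citations` function are hypothetical names for illustration; numbered markers such as `[1]` stand in for whatever visual indicator the product actually uses.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Source:
    """Hypothetical citation record: a display title and a URL."""
    title: str
    url: str


def format_with_citations(intro: str, body: str, sources: List[Source]) -> str:
    """Lead with an introduction, then the body, then a numbered source list.

    The numbered marker ([1], [2], ...) is the visual indicator the body
    can reference; each entry also carries a Markdown link (assumed to be
    rendered by the chat UI) so the user can verify the source.
    """
    lines = [intro.strip(), "", body.strip()]
    if sources:
        lines.append("")
        lines.append("Sources:")
        for i, src in enumerate(sources, start=1):
            lines.append(f"[{i}] [{src.title}]({src.url})")
    return "\n".join(lines)
```

Numbered markers keep the body scannable, while the list at the end gives each cited claim a link the user can follow.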